I read this classic book recently and liked it enough to write down my thoughts about it. These are put together without real form – they are rough, but I’m not trying to write an essay. Also, if you want to follow what I’m saying, you should probably read the book first.

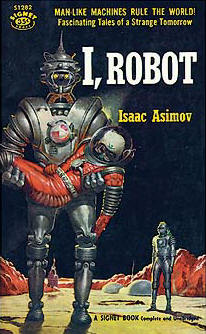

Here’s a summary: I, Robot is a collection of short stories set in the same future “universe.” They all involve Dr. Susan Calvin, a “robopsychologist,” in some way, as she analyzes the problems with the robots in each scenario. She works for U.S. Robots and Mechanical Men Inc., a company that revolutionized robotics and made the “positronic brain,” the device that allows robots to think on the level of humans. But since humans made the robots basically from scratch, complex problems arise that nobody anticipated. The stories cover various situations that could happen, such as an advanced robot that thinks humans are inferior, robots confronted with paradoxes or contradictions, or the problems that come with letting robots face more danger. One element the book is remembered for is Asimov’s 3 Laws of Robotics, his thoughts on how we would have to deal with robots and make them safe. Roughly: robots can’t harm a human being, they must obey human orders, and they must protect their own existence, with the laws taking priority in that order.

-Asimov does express well the idea of robots being common, to the point that the humans take them for granted.

-The reporter and Dr. Calvin talk about the time before robots, and the reporter doesn’t recognize it.

-The family in the beginning and the scientists who aren’t roboticists deal with robots as if they don’t care about interacting with them on a deeper level, where they would understand what is going on inside the robot. We can see this today with robots too, except that now people just shy away and don’t interact at all. Asimov shows what it would be like if robots became much more “common.”

-On that last thought, the people don’t recognize the importance of what is going on when something goes wrong.

- Even though the robots are variable and flexible enough to understand human thinking, they should still be precise (by today’s standards).

- Breaking the 3 laws means something is seriously wrong in that world, and there should be more safeguards against it.

-The humans shouldn’t get annoyed and act like the robot is just “stupid,” or like their coffee maker broke and they can’t have their coffee.

-We see this today with cars and computers, but these robots are much more complex and a failure has more serious implications. It’s like a fighter jet that breaks in flight, where the pilot has to figure out what happened and land quickly because his life is in danger.

-The technology:

-The positronic brain (Data!) is truly this fix-all technology. Asimov doesn’t really even consider that it is a powerful computer. Multiple indications show that it is not computer-based, specifically that it isn’t really “programmed,” it is mostly just “made.”

-The name implies it is a quantum computer, allowing for smaller, faster, and more natural computing. This seems plausible (people are researching it now), but only if it is based on the positron itself, on some principle or interaction related to it, or on a reaction that uses the positron only briefly. This is because the positron is an antiparticle (the “opposite” of an electron) and annihilates when it meets an electron in ordinary matter. It would seem difficult to build with and use.

-The emphasis on mechanics and mathematics instead of electronics and programming is clear. The robots are very mechanical and mostly metal, with hardly any plastic, and there is not much mention of electronics, wires, or circuits (though I don’t think people were that far along in electronics when this book was published). A lot of gears, etc. are mentioned, instead of what we would find prevalent in robots today.

-They don’t realize how prevalent computers would become! “Oh, these calculations are so difficult! They must have a computer here in this facility that we can use!” “When the drawing board and slide-rule men said it was ok…” That’s why the robots are sometimes so wondrous: Asimov didn’t really know how they could be made. He really only knew that we would have to recreate humans from scratch, so to speak, and that there are many emotional things that are difficult and would cause problems.

-I find it interesting that the first “talking robots” were a big advancement, and that robots with emotion came first. Nowadays we would think it would be the other way around: we would first have talking robots that didn’t fully understand what they were saying because they didn’t have emotion, and robots with emotion would come later.

-The 3 Laws are “built” into the brain. Human actions are so complex that I don’t know how this could be accomplished. It’s difficult enough to make a machine that understands and truly copies human qualities, but I think it is even more difficult to make one that recognizes bad situations on its own. (But I guess if you have wondrous technology that can do the former, it could do many other difficult things too.)

-The robots go “insane” when confronted with a contradiction or paradox concerning the 3 Laws. Modern robots would likely have better, more careful safeguards and would be able to recognize when something is wrong, on either a large or a small scale. They would test themselves and communicate with humans if something went wrong.

-The robots are so good at displaying emotion that they do it in extraneous ways. “Oh my, hurt coming to humans! My goodness, what a thought!” I think modern robots would be much more precise and less frivolous.
